Written by Martin Luerssen, CTO at Clevertar

True story: A good two decades ago, when I had barely started my career, someone casually asked me what I did for a living. “Artificial intelligence”, I excitedly replied. Awkward silence followed. Finally they spoke again. “I’m not sure what to think of someone who believes in UFOs.”

The confusion was perhaps warranted. At the time, AI was something seen in science fiction films with aliens and spaceships, far removed from daily life. Things have evolved significantly since then. Unless you’re a mountain-dwelling recluse, you’ve probably encountered AI at some point today: your phone, your car, your home are packed with it. Entire nations are intensely vying for AI supremacy and, as with the very technology driving the previous cold war, plenty of sharp minds are also utterly convinced AI will bring about the extinction of humanity. But all of that still feels like background noise – it may easily pass you by if you don’t pay attention. With what’s next, though, you won’t have that luxury any longer.

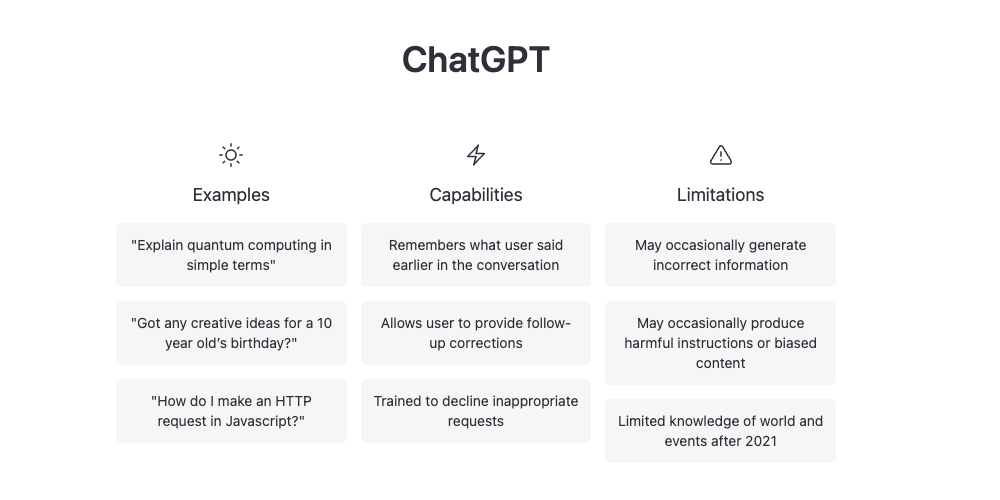

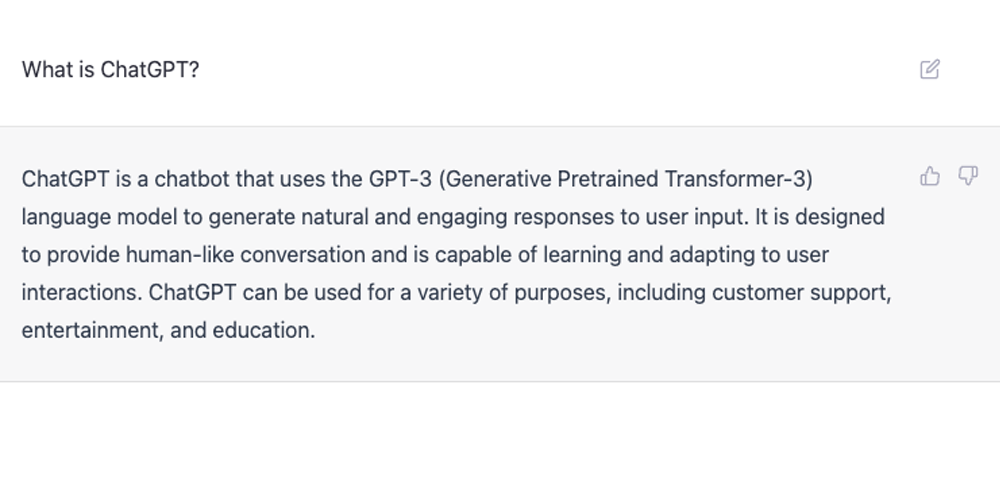

The hottest thing in AI right now is OpenAI’s ChatGPT. It’s a chatbot, and it’s powered by artificial neural networks (ANNs). In some ways, that immediately feels ancient.

The first real chatbot, ELIZA, was from the 1960s. Artificial neurons were conceived as far back as 1943, right around the birth of the computer. Both fields were repeatedly hit by intellectual setbacks that made them seriously uncool to entire generations of researchers, and progress was anaemic for decades at a time. But persistence sometimes wins out. When researchers noticed that the graphics hardware built for playing 3D games also ran ANNs really well, ANNs finally gained an edge over other methods. With renewed interest, more money, and brilliant minds working on ANNs once more, progress has been brisk ever since.

That progress is easiest to see in the size of ANNs. They’ve improved in other ways, too, but bigger is certainly better here. The basic backprop network from 1986, the sort you’re first taught in any ML course, had 144 parameters; AlexNet, the benchmark-crushing 2012 image classifier that arguably turned the tide towards ANNs, had 60 million; Megatron-Turing, a current-generation large language model (LLM), has 530 billion. No matter how you plot it, you’ll end up with something like an exponential – and those are famously hard to grok. With technology, an exponential usually means an obscure nerdy hobby at first, and then something that replaces everything you know. It’s not hard to guess what stage we’re at.

Even if all progress were halted tomorrow, OpenAI’s recent release of ChatGPT and an updated InstructGPT would be enough to put us firmly in that second stage.

The capabilities of these LLMs are already staggering, and the Internet continues to fill up with examples as we speak. It’s hard to ring-fence what they can’t do: they can write an essay, a poem or a software program, or debate a philosophical, political or personal point. If it’s language, it’s fair game.

This year also bestowed upon us some amazing text-to-image models such as DALL-E and Stable Diffusion, but their output is often – albeit perhaps not always – still distinguishable from a human artist due to small mistakes (such as poor rendering of hands). That isn’t something we really see with ChatGPT, where, in direct comparison, your average human just isn’t playing in the same league anymore. Chatbots, long synonymous with AI being dumb, are finally having the last laugh, too.

That’s not to say it’s all sunshine and rainbows. LLMs like ChatGPT are trained on a large dump of the Internet and thus embed many of the flaws and biases of digital humanity. Concern and alarm about this accompany every LLM release like clockwork, but that reaction fails to account for how much recent progress has been made in aligning AI output with what we consider to be good, in all senses of the word. Compared to just a year ago, OpenAI’s LLMs are vastly better at doing exactly what you want them to, not making stuff up (as much), and rejecting indecent proposals. ChatGPT is another massive step forward here, as shown most clearly by the fact that it has somehow not been roundly condemned the way Meta’s recent Galactica was. On that trajectory, if anything, it feels like every future LLM will be politically correct to a fault, and current doomsayers just seem to lack imagination.

LLMs still struggle to apply common sense, though. In one example I recently encountered, GPT-3 insisted to me that 29 hours is longer than 38 hours, no matter how I phrased the problem. ChatGPT got that one right but bungled the explanation with some bad maths. Even though LLMs may easily outperform humans on numerous challenging tasks, they’re also occasionally weird and wrong in a way that a human never would be. This conjures up a famous philosophical thought experiment known as the Chinese Room: simply put, does rearranging language symbols into coherent sentences signify any real understanding? With an LLM, we know there isn’t any deep inner life here: the model just outputs the most probable word, one at a time. And yet you can have a very detailed conversation with it about the real world, a world the model has never experienced, and argue about feelings and thoughts that we regard as quintessentially human. Google’s LaMDA AI convinced someone to advocate for it to obtain legal personhood. Many people will be fooled in the same way; it’s as predictable as night following day. What is perhaps surprising is just how much of a simulacrum of sentience (and, indeed, of the entire human condition) can be achieved simply by reading the Internet.
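For the curious, here is a deliberately tiny Python sketch of what “the most probable word, one at a time” means, a technique known as greedy decoding. The word table is entirely hypothetical and stands in for the model itself: a real LLM computes these probabilities with a neural network over the whole preceding conversation (and works on sub-word tokens rather than whole words), and chat systems often sample from those probabilities rather than always taking the top choice.

```python
# Toy greedy decoder: at each step, append the single most probable next word.
# NEXT_WORD_PROBS is a hypothetical, hand-written table standing in for a real
# language model, which would compute these probabilities with a neural network
# conditioned on the whole preceding text, not just the last word.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "<end>": 0.2},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.8, "<end>": 0.2},
    "dog": {"barked": 0.9, "<end>": 0.1},
    "sat": {"<end>": 1.0},
    "barked": {"<end>": 1.0},
}


def greedy_generate(max_words: int = 10) -> list[str]:
    """Generate words by always picking the highest-probability continuation."""
    words = []
    current = "<start>"
    for _ in range(max_words):
        candidates = NEXT_WORD_PROBS[current]
        # No sampling: simply take the most probable next word.
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        words.append(current)
    return words


print(" ".join(greedy_generate()))  # the cat sat
```

Nothing in that loop “understands” cats or sitting; it only follows the numbers, which is exactly why the fluency of real LLMs is so striking.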

Nevertheless, art and language are generally viewed as hallmarks of human identity, and LLMs have made serious inroads into that.

We’re perhaps not as special in this regard as we thought we were. Artists are naturally worried that this is a direct threat to them, but the biggest disruption will be all about language. Language is used for storage, communication, and reasoning, for everything from shopping to science to organised superstition. No business or government in the world could operate without it. Language goes in, language comes out – if you’re a white-collar worker, there’s probably not much else you do every day. With LLMs, this can now be automated with much the same proficiency with which computers replaced mental arithmetic: every language-related task can be done faster, better, more consistently and more cheaply, with less effort. Copywriting and coding assistance were among the first LLM applications to achieve widespread success, but given the latest capability improvements, a flood of new applications should be anticipated soon.

The dawn of an industrial revolution, comparable to any witnessed in the past, is upon us, and its ramifications for our society will be far-reaching.

This won’t be because LLMs (and AIs in general) will directly replace every human being – or even any at all. In fact, humans working side by side with expert AI assistants have been shown to perform various tasks better than either the AI or a human alone. Rather than fearing our robotic ‘competitors’, we will simply figure out how best to team up. What humans are still good at (and AIs aren’t) will become all the more valuable in this brave new world. Make no mistake, though: this symbiotic partnership will be unrivalled in its productivity and impact, with all the consequences that entails for everyone else. Those who don’t follow suit are likely to be left behind and may ultimately lose their livelihoods. Unlike in days past, it may no longer be possible to ignore the presence of AI in our midst.

(This article was written without the help of ChatGPT. Treasure it for its future rarity.)
