Written by Martin Luerssen

CTO at Clevertar

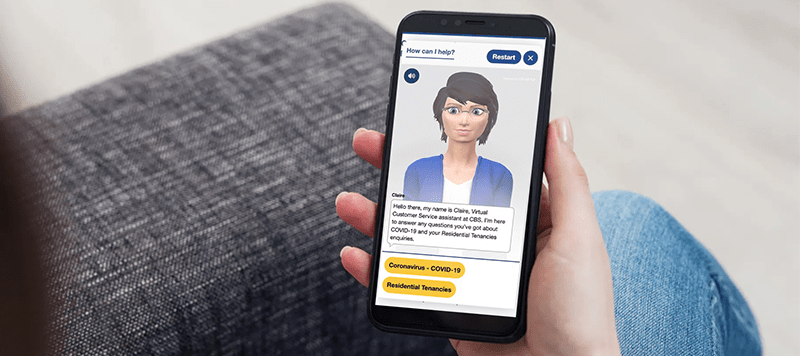

Our team at Clevertar has just put the finishing touches on a service upgrade that will make our 3D virtual agents much faster and better able to handle large volumes of traffic. We achieved this by doubling down on a strategy we’ve pursued since our earliest days: processing information close to the ‘edge’.

Unlike many other chatbots, Clevertar software does most of the hard work on the spot (on your device) rather than on some distant server thousands of kilometres away. As with other forms of “edge computing”, this brings big advantages, alongside some noteworthy caveats. In fact, chatbots are a very instructive example of the complex trade-offs involved in choosing between “cloud” and “edge”. Since chatbots are our bread and butter here at Clevertar, this is a great opportunity to delve into the topic.

So, what is ‘edge computing’?

A quick refresher on edge computing today

As the name suggests, it’s computing done at the ‘edge’, close to the data source, rather than remotely in the “cloud”. It’s also a fashionable term for all the computing territory not yet captured (but very much coveted) by big cloud providers such as AWS and Azure.

And it’s exactly where we believe chatbots should hang out.

Over the last decade, a lot of computing has moved into data centres operated by the big-name providers mentioned above. These days you don’t need much more than a browser and an internet connection to do, well, virtually everything, with much of the logic executed in the cloud. It’s as if we have gone back half a century to the era of central mainframes and dumb terminals that preceded the rise of personal computing.

Of course, personal computers haven’t gone away; indeed, they’ve proliferated into a vast range of mostly smaller devices, such as phones and tablets. Thanks to their frustratingly short lifespans, hardly any of these devices are genuinely slow, and many can crunch numbers better than a PC. Any Software-as-a-Service provider will look at all that spare capacity with some longing, because cloud computing does not come cheap. Once appropriate redundancy is in place, a typical cloud application quickly churns through server time and dollars.

But if the computing can be done on the device, that is, on the “edge”, there are big savings to be had. Not only is the customer paying for the hardware and electricity, but they’re doing it with a smile on their face!

The application can also stay fast and smooth however popular it becomes, because capacity grows with every new customer and their device.

Putting the money where our chatbot is

Clevertar’s edge-centred architecture brings all of these advantages into one design, so that we can keep our cloud footprint much smaller than would otherwise be possible. By far the biggest impact is felt with our trademark 3D virtual agents, which embody the chatbot through speech and animation. When we render our 3D graphics on the edge, we reduce operating costs by a massive 99+%, while users benefit from lower bandwidth demands and a better overall experience. It’s a different worldview, really. Although game-streaming services such as Stadia have demonstrated that rendering and streaming rich 3D media from the cloud can work at a commercial scale, “edge” case studies like our own point to a leaner, meaner future.

Our reliance on edge computing also helps reduce lag, which is a major impediment to any good conversation. Real conversations are highly interactive and rely on subtle, time-sensitive cues for real-time feedback and turn-taking.

Even the very artificial conversations you might have with virtual assistants such as Alexa and Siri are noticeably affected by lag. When relying on the cloud, as these assistants do, some of this lag is simply unavoidable, because the speed of light is a fundamental constraint. Conversely, that lag can be virtually eliminated by moving the work closer to the source.
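To put a rough number on the speed-of-light constraint, here is a back-of-the-envelope sketch in Python. It assumes signals travel through optical fibre at about two-thirds of the speed of light in a vacuum, and it ignores routing detours, queuing and server processing, so real round trips will only be slower. The function name is ours, for illustration only.

```python
# Back-of-the-envelope lower bound on network round-trip time imposed by the speed of light.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
# Assumption: light in optical fibre travels at roughly two-thirds of its vacuum speed.
FIBRE_SPEED_FACTOR = 2 / 3


def min_round_trip_ms(one_way_km: float) -> float:
    """Return the minimum round-trip propagation delay in milliseconds.

    Routing detours, queuing and server processing are ignored; they only add to this.
    """
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S * FIBRE_SPEED_FACTOR
    # There and back again, converted from seconds to milliseconds.
    return 2 * one_way_km / speed_km_per_s * 1000


if __name__ == "__main__":
    # Illustrative distances: same city, same country, across a continent, intercontinental.
    for km in (10, 1_000, 4_000, 12_000):
        print(f"{km:>6} km away: at least {min_round_trip_ms(km):6.1f} ms per round trip")
```

A server 1,000 km away already costs about 10 ms per round trip before any work is done, and a single conversational turn may need several round trips. Work done on the device pays none of this.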

With a chatbot, however, this is currently only practical if you avoid complex machine learning (ML) models, which are needed to handle natural language, speech, and image input. State-of-the-art models in this space tend to be huge, with billions of parameters. More targeted, optimised models can realistically be deployed in native apps, although downloading them to a browser is still a bit too much to ask. In the browser, then, edge computing means either avoiding natural inputs (e.g., by using multiple choice) or embracing a hybrid approach. The hybrid approach is still highly valuable: by relying on the cloud only for a select set of stateless, scalable capabilities, it can reduce overall system complexity and hence improve efficiency and robustness.
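A minimal sketch of that hybrid approach, in Python. Everything here is hypothetical: `UserTurn`, `resolve_intent` and the `cloud_classify` callback are illustrative names, not Clevertar’s software. The idea shown is that conversation state and multiple-choice handling stay on the device, and only free-text input is handed to a stateless cloud function.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class UserTurn:
    """One user input captured on the device (hypothetical structure)."""
    choice: Optional[int] = None      # index of a multiple-choice option the user tapped
    free_text: Optional[str] = None   # natural-language input that needs an ML model


def resolve_intent(
    turn: UserTurn,
    options: Sequence[str],
    cloud_classify: Callable[[str], str],
) -> str:
    """Resolve a user turn to an intent, calling the cloud only when necessary.

    Multiple-choice answers are handled entirely on the device. Free text is sent
    on its own, without any conversation state, to a stateless cloud function.
    """
    if turn.choice is not None:
        # Edge path: no network round trip, and no data leaves the device.
        return options[turn.choice]
    if turn.free_text is not None:
        # Hybrid path: only the input that technically requires the ML model is shared.
        return cloud_classify(turn.free_text)
    raise ValueError("turn contains no input")


if __name__ == "__main__":
    calls = []

    def fake_cloud_classify(text: str) -> str:
        # Stand-in for a remote language-understanding service; a real one would make a network request.
        calls.append(text)
        return "book_appointment" if "appointment" in text.lower() else "unknown"

    options = ["book_appointment", "ask_question"]
    print(resolve_intent(UserTurn(choice=0), options, fake_cloud_classify))
    print(resolve_intent(UserTurn(free_text="Can I get an appointment?"), options, fake_cloud_classify))
    print(f"cloud calls made: {len(calls)}")
```

Because the cloud function sees only one piece of text at a time and keeps no state, it is easy to scale horizontally, and the same boundary also limits what data ever leaves the device.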

Walking the line between privacy and superior chatbot experiences

Sharing less data with the cloud means better privacy by default, another great benefit of edge computing. Many of us who own smart home speakers would be much less inclined to use them if they streamed every sound to their makers, rather than only what follows “OK Google” or “Hey Siri”. If a chatbot shares data only for the specific tasks that technically demand it, rather than sharing everything, that’s a far more acceptable privacy compromise for many of us. Naturally, every application, Clevertar’s included, is hungry for data, as this data is the foundation for better future services. The trick is to navigate the delicate trade-off between users’ privacy and the quality of the service, something edge computing is inherently well suited to.

Ultimately, no single, perfect approach exists for “edge” versus “cloud” computing, because each has specific capabilities and useful traits that the other cannot replicate, though these may change as new technologies arise. Clevertar has achieved some recent performance breakthroughs by building out our edge-based software, but we will continue to explore both decentralised and centralised designs to analyse and optimise the advantages of each. This may mean we never neatly fit into either category.

We think that’s an acceptable price to pay for delivering the best possible chatbot service on the market.

Explore AI with us

It’s never too soon or too late to explore the world of artificial intelligence!

We’re keen to discuss how AI could solve your business’s everyday problems, and how that might benefit your customers.

Please leave a message and we’ll get back to you soon.